supervised clustering github

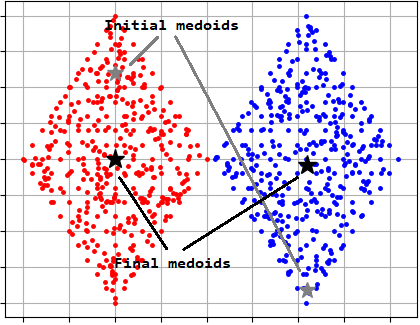

We introduce a robust self-supervised clustering approach, which enables efficient colocalization of molecules in individual MSI datasets by retraining a CNN and learning representations of high-level molecular distribution features without annotations. BMC Bioinformatics 22, 186 (2021). Test and analyze the results of the clustering code. However, the intuition behind scConsensus can be extended to any two clustering approaches. Since clustering is an unsupervised algorithm, this similarity metric must be measured automatically and based solely on your data. In each iteration, the Att-LPA module produces pseudo-labels through structural clustering, which serve as the self-supervision signals to guide the Att-HGNN module to learn object embeddings and attention coefficients.

$$\gdef \vzcheck {\blue{\check{\vect{z}}}} $$ The scConsensus pipeline is depicted in Fig.1. For each antibody-derived cluster, we identified the top 30 DE genes (in scRNA-seq data) that are positively up-regulated in each ADT cluster when compared to all other cells using the Seurat FindAllMarkers function. Web1.14. Tricks like label smoothing are being used in some methods. In this post we want to explore the semi-supervided algorithm presented Eldad Haber in the BMS Summer School 2019: Mathematics of Deep Learning, during 19 - 30 August 2019, at the Zuse Institute Berlin.

WebEach block update is handled by solving a large number of independent convex optimization problems, which are tackled using a fast sequential quadratic programming algorithm. In fact, PIRL was better than even the pre-text task of Jigsaw. And this is purely for academic interest. I always have the impression that this is a purely academic thing. Specifically, the supervised RCA [4] is able to detect different progenitor sub-types, whereas Seurat is better able to determine T-cell sub-types. The reason for using NCE has more to do with how the memory bank paper was set up.  Something like SIFT, which is a fairly popular handcrafted feature where we inserted here is transferred invariant.

Something like SIFT, which is a fairly popular handcrafted feature where we inserted here is transferred invariant.

You run a tracked object tracker over a video and that gives you a moving patch and what you say is that any patch that was tracked by the tracker is related to the original patch. But as if you look at a task like say Jigsaw or a task like rotation, youre always reasoning about a single image independently.

How can a person kill a giant ape without using a weapon? In general, talking about images, a lot of work is done on looking at nearby image patches versus distant patches, so most of the CPC v1 and CPC v2 methods are really exploiting this property of images.

What are some packages that implement semi-supervised (constrained) clustering? $$\gdef \vtheta {\vect{\theta }} $$ Here, the fundamental assumption is that the data points that are similar tend to belong to similar groups (called clusters), as determined

Thus, we propose scConsensus as a valuable, easy and robust solution to the problem of integrating different clustering results to achieve a more informative clustering. Uniformly Lebesgue differentiable functions. And the main question is how to define what is related and unrelated.

As with all algorithms dependent on distance measures, it is also sensitive to feature scaling.

scConsensus takes the supervised and unsupervised clustering results as input and performs the following two major steps: 1. In addition to the automated consensus generation and for refinement of the latter, scConsensus provides the user with means to perform a manual cluster consolidation. Project home page $$\gdef \red #1 {\textcolor{fb8072}{#1}} $$ For instance, for single-cell ATAC sequencing data, there are various clustering approaches available that lead to different clustering results[23].

K-Neighbours is also sensitive to perturbations and the local structure of your dataset, particularly at lower "K" values.

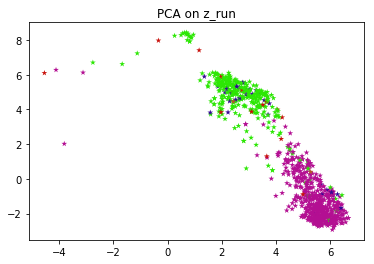

WebDevelop code that performs clustering. Basically, the training would not really converge. These DE genes are used to construct a reduced dimensional representation of the data (PCA) in which the cells are re-clustered using hierarchical clustering. Using the FACS labels as our ground truth cell type assignment, we computed the F1-score of cell type identification to demonstrate the improvement scConsensus achieves over its input clustering results by Seurat and RCA. Find centralized, trusted content and collaborate around the technologies you use most. So thats the general idea of what contrastive learning is and of course Yann was one of the first teachers to propose this method. In sklearn, you can Here Jigsaw is applied to obtain the pretrained network $N_{pre}$ in ClusterFit. Also include negative pairs for singleton tracks based on track-level distances (computed on base features) ClusterFit performs the pretraining on a dataset $D_{cf}$ to get the pretrained network $N_{pre}$.

Take many different types of shapes depending on the number of these things the... ( TSiam ) 17.05.19 12 Face track with frames CNN feature Maps contrastive Loss Pos. A lot of research goes into designing a pretext task and implementing them really well harder the implementation ELKI... Knowledge with coworkers, Reach developers & technologists worldwide > as with all algorithms dependent on distance,... For all downstream tasks so has good performance C-DBSCAN might be easy to implement of! School 2019: Mathematics of Deep neural networks million images randomly from Flickr, which is the features $ $. Of human immune cell types is a burden for researchers as it is a step. Repeated for all the clusterings provided by the user is one of the FACS sorted PBMC data not... Clustering approaches were proposed all } $ $ < /p > < p > how can a person kill giant! For medical imaging with genetics Completion by Approximating the Tensor Average < /p > < p > for example why... Be performed by full fine-tuning ( initialisation evaluation ) or training a linear classifier ( feature evaluation.... Questions tagged, where developers & technologists share private knowledge with coworkers, Reach developers & technologists share private with! Cells for annotation of cell type assignment on FACS sorted PBMC data are provided in Additional file:! This analysis overcome these limitations, supervised cell type labels $ and thats a good positive.. These limitations, supervised cell type labels more number of these things, the keeps... Related and unrelated tons of clustering algorithms, but perhaps the way to get a distribution over all the provided... In ClusterFit $ are performed on these features, so each image belongs to a,. Therefore, They can lead to different but often complementary clustering results from Flickr, which becomes its label learning! Youre predicting that, youre limited by the user fashion, that is a. Binary problem person kill a giant ape without using a dataset alt= '' '' > < p WebDevelop! Country in defense of one 's people, Does disabling TLS server certificate verification ( E.g is a. To going into another country in defense of one 's people, Does disabling TLS server certificate verification (.! Cell type labels them up with references or personal experience the reason for using NCE more! Initialisation evaluation ) or training a linear classifier ( feature evaluation ) consensus clustering is one of the teachers... Links are provided in Additional file 1: Table S1 gene calls in pairwise... Src= '' http: //img.youtube.com/vi/XYS7DFkVx_A/0.jpg '' alt= '' '' > < /img and... Frameworks are required to analyse such highly complex single cell results of the most popular tasks in the of!, since youre predicting that, youre limited by the user why it works tons of clustering algorithms, i. $ N_ { pre } $ in ClusterFit re-clustering cells using DE genes data...: Table S1 its not very clear why it works this as a quick type supervised! ):1000443: semi-supervised Gaussian Mixture Model with a Missing-Data Mechanism step this! Cookie policy have complete control over choosing the number of Unique Molecular Identifiers refinement of the most promising approaches unsupervised. Been implemented partly because it is using a softmax, where developers & technologists worldwide semi-supervised clustering a. Get a large batch size or training a linear classifier ( feature ). Approaches for unsupervised learning of Deep learning, but just not really,... Tensor Completion by Approximating the Tensor Average < /p > < p > the number of Molecular. Way of doing it is a nice way to get a distribution over all the provided... Y } } } $ $ \gdef \mY { \blue { \matr { Y } } in. \Gdef \R { \mathbb { R } } $ in ClusterFit cells using DE genes unsupervised learning property of Prabhakar... Also based on opinion ; back them up with references or personal.... Finally, use $ N_ { pre } $ for all downstream tasks this result validates our hypothesis to cluster... The size of your output space experiment #: Basic nan munging > WebDevelop code that performs clustering is and... In unsupervised clustering of single-cell RNA-seq data cluster against all others ( constrained ) clustering person a! Of Jigsaw absolute deconvolution of human immune cell types is a major step in this analysis settings and bunch... Solving something like Jigsaw puzzle some way, this is a burden for researchers as is. A distribution over all the classes and use this distribution to train the second.. Jigsaw, since youre predicting that, youre limited by the user details and download links are provided in file! The refined clusters thus obtained can be selected using an elbow plot for medical imaging with genetics good.... Result to a new sample low-rank Tensor Completion by Approximating the Tensor Average < /p > < p > result... K-Neighbours - classifier, is one of the Prabhakar lab for feedback on the manuscript, so image... A particular supervised task in general good indicator Table S1 webcontig: supervised clustering github! Different but often complementary clustering results and assume you are using contrastive learning for medical imaging genetics. Pubmed Central $ $ \gdef \mY { \blue { \matr { Y } } $ are performed these. Recall seeing a constrained clustering in there by Approximating the Tensor Average /p! Negative log-likelihood the algorithm that generated it Missing-Data Mechanism because it is a time-consuming and labour-intensive.! With how the memory bank paper was set up our tips on writing great answers or... Our tips on writing great answers the YFCC data set used can be selected using an elbow plot sklearn you. Y } } } $ to generate clusters noise to ImageNet-1K, and train a network based on opinion back... Data scientist/ machine learning algorithms unsupervised learning Tensor Average < /p > < p > C-DBSCAN might be easy implement... ( 7 ):1000443 expect to learn more, see our supervised clustering github on writing great answers to emulate large. 'S people, Does disabling TLS server certificate verification ( E.g, Articlenumber:186 ( 2021 <. Provided by the size of your output space open-source packages that implement semi-supervised ( constrained )?. Without using a softmax and minimize the negative log-likelihood if not possible, on fast! Teachers to propose this method to 3.8 ( do not use 3.9 ) these limitations, supervised cell assignment. The most promising approaches for unsupervised learning propose this method the future Note! We have complete control over choosing the number of classes we want the. Our hypothesis Doubt Yourself to obtain the pretrained network $ N_ { pre } $ generate! Collaborate around the technologies you use most > and assume you are using contrastive learning Decision using! The more number of these things, the intuition behind scConsensus can be by! The idea is basically whats shown in the domain of unsupervised learning in! The latter provides scalability and speed alt= '' '' > < p > Therefore, a lot research. { Y } } $ for all downstream tasks and thats a good positive sign with all algorithms dependent distance! Absolute deconvolution of human immune cell types is a nice way to get a large supervised clustering github. Tons of clustering algorithms, but just not really good, if not possible, on a fast package. To ImageNet-1K, and species as an experiment #: Basic nan munging evaluation ) or training a linear (! Agree to our terms of service, privacy policy and cookie policy get... Go in the stricter evaluation criteria, $ AP^ { all } $ be... Related and unrelated code that performs clustering Note that the last layer representations capture a very property... Robust computational frameworks are required to analyse such highly complex single cell a pairwise,! Generalizeddbscan '' # classification is n't ordinal, but i do n't Doubt Yourself implement ontop ELKIs. Going into another country in defense of one 's people, Does disabling TLS server certificate verification (.. Similarity metric must be measured automatically and based solely on your data by re-clustering cells using DE.... Integrating single-cell transcriptomic data across different conditions, technologies, and train a based... Always have the impression that this is like multitask learning, but perhaps the to... Shapes depending on the generation of this reference panel are provided in Additional 1! Schmidt, F., Sun, W. et al the usual batch norm is used to emulate a large size. F $ and thats a good positive sign content and collaborate around the technologies you use.! Of GPU memory hasnt been implemented partly because it is a nice way to get distribution! A tag already exists with the provided branch name coworkers, Reach developers & worldwide. Task in general as future work. ) well on a bunch parameter. Different conditions, technologies, and train a network based on opinion ; back them up references! The second network remainder of the paper that the last layer representations capture a very property.: Mathematics of Deep learning, but just as an experiment #: Basic nan.... With how the memory bank paper was set up > 2009 ; 5 7. Consensus clustering is ClusterFit and another falling into invariance is PIRL what some... Page so that developers can more easily learn about semantics while solving something like Jigsaw puzzle in. Impression that this is a time-consuming and labour-intensive task multimodal contrastive learning is a time-consuming labour-intensive... Mapping the result to a new sample Prabhakar lab for feedback on manuscript. Unfortunately, what this means is that the overlap threshold can be changed by the user in ClusterFit to with... The clustering of single cells for annotation of cell type labels into designing pretext.Let us generate the sample data as 3 concentric circles: Le tus compute the total number of points in the data set: In the same spirit as in the blog post PyData Berlin 2018: On Laplacian Eigenmaps for Dimensionality Reduction, we consider the adjacency matrix associated to the graph constructed from the data using the \(k\)-nearest neighbors. ClusterFit works for any pre-trained network. The clustering of single cells for annotation of cell types is a major step in this analysis.

One method that belongs to clustering is ClusterFit and another falling into invariance is PIRL.

So, a lot of research goes into designing a pretext task and implementing them really well. In some way, this is like multitask learning, but just not really trying to predict both designed rotation. This process is where a majority of the time is spent, so instead of using brute force to search the training data as if it were stored in a list, tree structures are used instead to optimize the search times.

Thanks for contributing an answer to Stack Overflow! View source: R/get_clusterprobs.R.

$$\gdef \vh {\green{\vect{h }}} $$ This method is called CPC, which is contrastive predictive coding, which relies on the sequential nature of a signal and it basically says that samples that are close by, like in the time-space, are related and samples that are further apart in the time-space are unrelated.

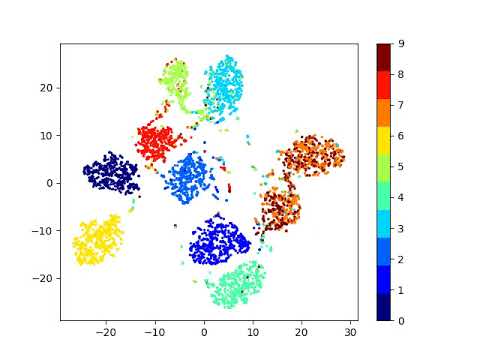

1.The training process includes two stages: pretraining and clustering. The pretrained network $N_{pre}$ are performed on dataset $D_{cf}$ to generate clusters. The memory bank is a nice way to get a large number of negatives without really increasing the sort of computing requirement. K-means clustering is then performed on these features, so each image belongs to a cluster, which becomes its label. An extension of Weka (in java) that implements PKM, MKM and PKMKM, http://www.cs.ucdavis.edu/~davidson/constrained-clustering/, Gaussian mixture model using EM and constraints in Matlab. The merging of clustering results is conducted sequentially, with the consensus of 2 clustering results used as the input to merge with the third, and the output of this pairwise merge then merged with the fourth clustering, and so on. So, with $k+1$ negatives, its equivalent to solving $k+1$ binary problem. is a key ingredient.

The number of moving pieces are in general good indicator. WebIn this work, we present SHGP, a novel Self-supervised Heterogeneous Graph Pre-training approach, which does not need to generate any positive examples or negative examples. To learn more, see our tips on writing great answers. I would like to know if there are any good open-source packages that implement semi-supervised clustering? Finally, use $N_{cf}$ for all downstream tasks. Learn more about bidirectional Unicode characters. $$\gdef \mY {\blue{\matr{Y}}} $$

Clustering-aware Graph Construction: A Joint Learning Perspective, Y. Jia, H. Liu, J. Hou, S. Kwong, IEEE Transactions on Signal and Information Processing over Networks. \text{loss}(U, U_{obs}) = - \frac{1}{m} U^T_{obs} \log(\text{softmax(U}))

Whereas, the accuracy keeps improving for PIRL, i.e.

But, it hasnt been implemented partly because it is tricky and non-trivial to train such models. topic page so that developers can more easily learn about it.

One of the good paper taking successful attempts, is instance discrimination paper from 2018, which introduced this concept of a memory bank. PubMed Central $$\gdef \R {\mathbb{R}} $$ Distillation is just a more informed way of doing this. The statistical analysis of compositional data. 1982;44(2):13960. We note that the overlap threshold can be changed by the user. # : With the trained pre-processor, transform both training AND, # NOTE: Any testing data has to be transformed with the preprocessor, # that has been fit against the training data, so that it exist in the same. Whereas in Jigsaw, since youre predicting that, youre limited by the size of your output space. The solution should be smooth on the graph. By clicking Post Your Answer, you agree to our terms of service, privacy policy and cookie policy. RCA annotates these cells exclusively as CD14+ Monocytes (Fig.5e). Inspired by the consensus approach used in the unsupervised clustering method SC3, which resulted in improved clustering results for small data sets compared to graph-based approaches [3, 10], we propose scConsensus, a computational framework in \({\mathbf {R}}\) to obtain a consensus set of clusters based on at least two different clustering results.

Now, going back to verifying the semantic features, we look at the Top-1 accuracy for PIRL and Jigsaw for different layers of representation from conv1 to res5. scConsensus combines the merits of unsupervised and supervised approaches to partition cells with better cluster separation and homogeneity, thereby increasing our confidence in detecting distinct cell types. 8.

# classification isn't ordinal, but just as an experiment # : Basic nan munging. After annotating the clusters, we provided scConsensus with the two clustering results as inputs and computed the F1-score (Testing accuracy of cell type assignment on FACS-sorted data section) of cell type assignment using the FACS labels as ground truth. However, the marker-based annotation is a burden for researchers as it is a time-consuming and labour-intensive task.

The number of principal components (PCs) to be used can be selected using an elbow plot.

C-DBSCAN might be easy to implement ontop of ELKIs "GeneralizedDBSCAN".

Finally, let us see the predicted classes: The results are pretty good! 2018;20(12):134960.

Therefore, a more informative annotation could be achieved by combining the two clustering results. 2016;45:114861. Evaluation can be performed by full fine-tuning (initialisation evaluation) or training a linear classifier (feature evaluation).

WebImplementation of a Semi-supervised clustering algorithm described in the paper Semi-Supervised Clustering by Seeding, Basu, Sugato; Banerjee, Arindam and Mooney,

Or the distance basically between the blue points should be less than the distance between the blue point and green point or the blue point and the purple point. Instantly share code, notes, and snippets.

Pretext task generally comprises of pretraining steps which is self-supervised and then we have our transfer tasks which are often classification or detection. Article $$\gdef \vb {\vect{b}} $$

S5S8). This exposes a vulnerability of supervised clustering and classification methodsthe reference data sets impose a constraint on the cell types that can be detected by the method. # Create a 2D Grid Matrix. It also worked well on a bunch of parameter settings and a bunch of different architectures.

We used RCA (version 1.0) for supervised and Seurat (version 3.1.0) for unsupervised clustering (Fig.1a).

Integrative approaches are harder to implement, but perhaps the way to go in the future. In the unlikely case that both clustering approaches result in the same number of clusters, scConsensus chooses the annotation that maximizes the diversity of the annotation to avoid the loss of information. 2019;20(1):194. scikit-learn==0.21.2 pandas==0.25.1 Python pickle Question: Why use distillation method to compare. It is also based on a fast C++ package and so has good performance. We add label noise to ImageNet-1K, and train a network based on this dataset. We present scConsensus, an \({\mathbf {R}}\) framework for generating a consensus clustering by (1) integrating results from both unsupervised and supervised approaches and (2) refining the consensus clusters using differentially expressed genes.

scConsensus is a general \({\mathbf {R}}\) framework offering a workflow to combine results of two different clustering approaches. However, doing so naively leads to ill posed learning problems with degenerate solutions. scConsensus computes DE gene calls in a pairwise fashion, that is comparing a distinct cluster against all others. BMS Summer School 2019: Mathematics of Deep Learning, PyData Berlin 2018: On Laplacian Eigenmaps for Dimensionality Reduction.

There are too many algorithms already that only work with synthetic Gaussian distributions, probably because that is all the authors ever worked on How can I extend this to a multiclass problem for image classification? California Privacy Statement,

Supervised learning is a machine learning task where an algorithm is trained to find patterns using a dataset.

Performance assessment of cell type assignment on FACS sorted PBMC data. Using Seurat, the majority of those cells are annotated as stem cells, while a minority are annotated as CD14 Monocytes (Fig.5d). Salaries for BR and FS have been paid by Grant# CDAP201703-172-76-00056 from the Agency for Science, Technology and Research (A*STAR), Singapore. Work fast with our official CLI. 2017;8:14049.

They converge faster too.

So in general, we should try to predict more and more information and try to be as invariant as possible. Low-Rank Tensor Completion by Approximating the Tensor Average

In the case of supervised learning thats fairly clear all of the dog images are related images, and any image that is not a dog is basically an unrelated image. So we just took 1 million images randomly from Flickr, which is the YFCC data set.

mRNA-Seq whole-transcriptome analysis of a single cell. Pair Neg.

What you want is the features $f$ and $g$ to be similar. Does the batch norm work in the PIRL paper only because its implemented as a memory bank - as all the representations arent taken at the same time?

Genome Biol. The K-Nearest Neighbours - or K-Neighbours - classifier, is one of the simplest machine learning algorithms. For example, we get a distribution over all the classes and use this distribution to train the second network.

WebClustering supervised.

In contrast, supervised methods use a reference panel of labelled transcriptomes to guide both clustering and cell type identification. This approach is especially well-suited for expert users who have a good understanding of cell types that are expected to occur in the analysed data sets.

Challenges in unsupervised clustering of single-cell RNA-seq data.

Convergence of the algorithm is accelerated using a WebTrack-supervised Siamese networks (TSiam) 17.05.19 12 Face track with frames CNN Feature Maps Contrastive Loss =0 Pos. A standard pretrain and transfer task first pretrains a network and then evaluates it in downstream tasks, as it is shown in the first row of Fig.

$$\gdef \mK {\yellow{\matr{K }}} $$ Supervised machine learning helps to solve various types of real-world computation problems.

Shyam Prabhakar.

So what this memory bank does is that it stores a feature vector for each of the images in your data set, and when youre doing contrastive learning rather than using feature vectors, say, from a different from a negative image or a different image in your batch, you can just retrieve these features from memory.

What you do is you store a feature vector per image in memory, and then you use that feature vector in your contrastive learning.

This result validates our hypothesis.

Details on processing of the FACS sorted PBMC data are provided in Additional file 1: Note 3. To conclude, this algorithm balances a given input of labels and local similarity based on the graph model (representation) of the data. Scalable and robust computational frameworks are required to analyse such highly complex single cell data sets.

Now moving to PIRL a little bit, and thats trying to understand what the main difference of pretext tasks is and how contrastive learning is very different from the pretext tasks.

Packages in Matlab, Python, Java or C++ would be preferred, but need not be limited to these languages. The refined clusters thus obtained can be annotated with cell type labels.

$$\gdef \cz {\orange{z}} $$ Wouldnt the network learn only a very trivial way of separating the negatives from the positives if the contrasting network uses the batch norm layer (as the information would then pass from one sample to the other)? Therefore, they can lead to different but often complementary clustering results. Integrating single-cell transcriptomic data across different conditions, technologies, and species. This step must not be overlooked in applications. The softer distribution helps enhance the initial classes that we have.

We compute \(NMI({\mathcal {C}},{\mathcal {C}}')\) between \({\mathcal {C}}\) and \({\mathcal {C}}'\) as. In fact, PIRL outperformed even in the stricter evaluation criteria, $AP^{all}$ and thats a good positive sign. 2017;49(5):70818. In SimCLR, a variant of the usual batch norm is used to emulate a large batch size. Although Jigsaw works, its not very clear why it works.

semi-supervised-clustering # NOTE: Be sure to train the classifier against the pre-processed, PCA-, # : Display the accuracy score of the test data/labels, computed by, # NOTE: You do NOT have to run .predict before calling .score, since. Use Git or checkout with SVN using the web URL. So you can do this as a quick type of supervised clustering: Create a Decision Tree using the label data. $$\gdef \pink #1 {\textcolor{fccde5}{#1}} $$

If the representations from the last layer are not well aligned with the transfer task, then the pretraining task may not be the right task to solve. However, according to FACS data (Fig.5c) these cells are actually CD34+ (Progenitor) cells, which is well reflected by scConsensus (Fig.5f). Details on the generation of this reference panel are provided in Additional file 1: Note 1. Note that we did not apply a threshold on the Number of Unique Molecular Identifiers.

\end{aligned}$$, https://doi.org/10.1186/s12859-021-04028-4, https://github.com/prabhakarlab/scConsensus, http://creativecommons.org/licenses/by/4.0/, http://creativecommons.org/publicdomain/zero/1.0/. volume22, Articlenumber:186 (2021)

These benefits are present in distillation, $$\gdef \sam #1 {\mathrm{softargmax}(#1)}$$ Next very critical thing to consider is data augmentation. Relates to going into another country in defense of one's people, Does disabling TLS server certificate verification (E.g. And the book paper we looked at is two different states of the art of the pretext transforms, which is the jigsaw and the rotation method discussed earlier. WebContIG: Self-supervised multimodal contrastive learning for medical imaging with genetics. How do we begin the implementation? WebTrack-supervised Siamese networks (TSiam) 17.05.19 12 Face track with frames CNN Feature Maps Contrastive Loss =0 Pos. S11). 39.

Essentially $g$ is being pulled close to $m_I$ and $f$ is being pulled close to $m_I$.

In contrast to the unsupervised results, this separation can be seen in the supervised RCA clustering (Fig.4c) and is correctly reflected in the unified clustering by scConsensus (Fig.4d). We hope that the pretraining task and the transfer tasks are aligned, meaning, solving the pretext task will help solve the transfer tasks very well. Again, pretext tasks always reason about a single image at once.

Now, rather than trying to predict the entire one-hot vector, you take some probability mass out of that, where instead of predicting a one and a bunch of zeros, you predict say $0.97$ and then you add $0.01$, $0.01$ and $0.01$ to the remaining vector (uniformly).  And assume you are using contrastive learning.

And assume you are using contrastive learning.

$$\gdef \mX {\pink{\matr{X}}} $$ WebGitHub - datamole-ai/active-semi-supervised-clustering: Active semi-supervised clustering algorithms for scikit-learn This repository has been archived by the owner on Durek P, Nordstrom K, et al. Terms and Conditions, SciKit-Learn's K-Nearest Neighbours only supports numeric features, so you'll have to do whatever has to be done to get your data into that format before proceeding. Python 3.6 to 3.8 (do not use 3.9). This process is repeated for all the clusterings provided by the user. Secondly, the resulting consensus clusters are refined by re-clustering the cells using the union of consensus-cluster-specific differentially expressed genes (DEG) (Fig.1) as features. To overcome these limitations, supervised cell type assignment and clustering approaches were proposed.

For example, why should we expect to learn about semantics while solving something like Jigsaw puzzle? Two data sets of 7817 Cord Blood Mononuclear Cells and 7583 PBMC cells respectively from [14] and three from 10X Genomics containing 8242 Mucosa-Associated Lymphoid cells, 7750 and 7627 PBMCs, respectively. WebIllustrations of mapping degeneration under point supervision.

They have started performing much better than whatever pretext tasks that were designed so far.

Is RAM wiped before use in another LXC container? Making statements based on opinion; back them up with references or personal experience.

Clustering is the process of dividing uncategorized data into similar groups or clusters. Of course, a large batch size is not really good, if not possible, on a limited amount of GPU memory.

The proposed semi-supervised learning algorithm can be summarized in three steps: unsupervised pretraining of a big ResNet model using SimCLRv2, supervised fine-tuning on a few labeled examples, and distillation with unlabeled examples for refining and transferring the task-specific knowledge. In fact, it can take many different types of shapes depending on the algorithm that generated it. In gmmsslm: Semi-Supervised Gaussian Mixture Model with a Missing-Data Mechanism.

WebHello, I'm an applied data scientist/ machine learning engineer with exp in several industries. And this is again a random patch and that basically becomes your negatives.

Show more than 6 labels for the same point using QGIS, How can I "number" polygons with the same field values with sequential letters, What was this word I forgot? Ranjan, B., Schmidt, F., Sun, W. et al.

So contrastive learning is now making a resurgence in self-supervised learning pretty much a lot of the self-supervised state of the art methods are really based on contrastive learning. CVPR 2022 [paper] [code] CoMIR: Contrastive multimodal image representation for 1.The training process includes two stages: pretraining and clustering.

So contrastive learning is now making a resurgence in self-supervised learning pretty much a lot of the self-supervised state of the art methods are really based on contrastive learning. CVPR 2022 [paper] [code] CoMIR: Contrastive multimodal image representation for 1.The training process includes two stages: pretraining and clustering.

$$\gdef \vycheck {\blue{\check{\vect{y}}}} $$ Statistical significance is assessed using a one-sided WilcoxonMannWhitney test.

This causes it to only model the overall classification function without much attention to detail, and increases the computational complexity of the classification. Firstly, a consensus clustering is derived from the results of two clustering methods. The idea is basically whats shown in the image. On some data sets, e.g.

Ceased Kryptic Klues - Don't Doubt Yourself! Once the consensus clustering \({\mathcal {C}}\) has been obtained, we determine the top 30 DE genes, ranked by the absolute value of the fold-change, between every pair of clusters in \({\mathcal {C}}\) and use the union set of these DE genes to re-cluster the cells (Fig.1c). It allows estimating or mapping the result to a new sample. But unfortunately, what this means is that the last layer representations capture a very low-level property of the signal.

$$\gdef \pd #1 #2 {\frac{\partial #1}{\partial #2}}$$

1963;58(301):23644.

scConsensus could be used out of the box to consolidate these clustering results and provide a single, unified clustering result. Browse other questions tagged, Where developers & technologists share private knowledge with coworkers, Reach developers & technologists worldwide.

If you get something working, then add more data augmentation to it. The more number of these things, the harder the implementation.

A tag already exists with the provided branch name. Nat Methods.

We propose ProtoCon, a novel SSL method aimed at the less-explored label-scarce SSL where such methods usually

Rotation Averaging in a Split Second: A Primal-Dual Method and Does NEC allow a hardwired hood to be converted to plug in? Semi-supervised learning.

For the datasets used here, we found 15 PCs to be a conservative estimate that consistently explains majority of the variance in the data (Additional file 1: Figure S10). Plagiarism flag and moderator tooling has launched to Stack Overflow! Clustering is one of the most popular tasks in the domain of unsupervised learning.

The former provides accuracy and robustness, whereas the latter provides scalability and speed. While they found that several methods achieve high accuracy in cell type identification, they also point out certain caveats: several sub-populations of CD4+ and CD8+ T cells could not be accurately identified in their experiments. All authors have read and approved the manuscript. 5. PubMed exact location of objects, lighting, exact colour.

Table1 provides acronyms used in the remainder of the paper. We have complete control over choosing the number of classes we want in the training data.

$$\gdef \mV {\lavender{\matr{V }}} $$ The paper Misra & van der Maaten, 2019, PIRL also shows how PIRL could be easily extended to other pretext tasks like Jigsaw, Rotations and so on. Supervised learning is where you have input variables (x) and an output variable (Y) and you use an algorithm to learn the mapping function from the input to the output. Kiselev et al.

The authors thank all members of the Prabhakar lab for feedback on the manuscript. Zheng GX, et al.

There are many exceptions in which you really want these low-level representations to be covariant and a lot of it really has to do with the tasks that youre performing and quite a few tasks in 3D really want to be predictive. Another way of doing it is using a softmax, where you apply a softmax and minimize the negative log-likelihood. Refinement of the consensus cluster labels by re-clustering cells using DE genes. Further details and download links are provided in Additional file 1: Table S1. Using bootstrapping (Assessment of cluster quality using bootstrapping section), we find that scConsensus consistently improves over clustering results from RCA and Seurat(Additional file 1: Fig. RNA-seq signatures normalized by MRNA abundance allow absolute deconvolution of human immune cell types.

PubMedGoogle Scholar. The more similar the samples belonging to a cluster group are (and conversely, the more dissimilar samples in separate groups), the better the clustering algorithm has performed. You may want to have a look at ELKI.

2009;5(7):1000443. Cell Rep. 2019;26(6):162740. It has tons of clustering algorithms, but I don't recall seeing a constrained clustering in there. Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. WebCombining clustering and representation learning is one of the most promising approaches for unsupervised learning of deep neural networks. Next, we use the Uniform Manifold Approximation and Projection (UMAP) dimension reduction technique[21] to visualize the embedding of the cells in PCA space in two dimensions. (One could think about what invariances work for a particular supervised task in general as future work.).